What If Scientific Impact Could Be Negative?

December 16, 2019

Originally published on Economics from the Top Down

Blair Fix

Scientists live and die by their scientific ‘impact’. For the uninitiated, ‘impact’ is a measure of a scientist’s contribution to their field. While there are many measures of scientific impact, almost all of them focus (in some way) on citations. So if more people cite your papers, you have more scientific ‘impact’.

The idea behind counting citations is that they quantify other people’s engagement with your ideas. It’s similar to counting ‘likes’ on social media. And as with social media, there is (currently) no such thing as a scientific ‘dislike’. There is no such thing as a negative citation.

But what if there was? What if citations could be both positive and negative? This, I think, would throw a much-needed wrench in how we measure impact. It would be uncomfortable for researchers, but probably good for science.

Not all press is good press

The idea behind negative citations is simple — we need to distinguish between praise and criticism. If I cite a paper while praising it, this should count as a positive citation. But if I cite a paper while criticizing it, this should count as a negative citation.

Similarly, if I test a theory and find empirical support, my citation to the theory paper should be positive. But if I test a theory and find no empirical support, my citation to the theory paper should be negative.

The use of both positive and negative citations is, I think, more in line with how science is done. We don’t judge theories solely by the number of times they’ve been tested. Instead, we judge theories by the cumulative weight of evidence, both good and bad. So a theory that is well tested — yet uniformly found to be wrong — does not count as a ‘good’ theory. It is a theory that should be abandoned.

What if we judged the scientific impact of individuals no differently than how we judge a scientific theory? We would add positive citations and subtract negative citations, just as we weight evidence for and against a theory.

So when I write a paper that is critical of human capital theory, my references to human capital papers should count negatively to their citation count. Similarly, when someone tests revealed preference theory and finds no support, their references to revealed preference theorists should count negatively.
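To make the arithmetic concrete, here is a minimal sketch of the add-and-subtract accounting described above. The labels, the `WEIGHTS` table, and the `net_citations` function are all hypothetical names chosen for illustration, and the sketch assumes each citation has already been labeled as positive or negative by some means.

```python
from collections import Counter

# Hypothetical weights: a positive citation adds one, a negative one subtracts one.
WEIGHTS = {"positive": 1, "negative": -1}

def net_citations(labels):
    """Add positive citations and subtract negative ones."""
    counts = Counter(labels)
    return sum(WEIGHTS[label] * n for label, n in counts.items())

# Made-up labels for the citations received by a single paper.
labels = ["positive", "negative", "negative", "positive", "negative"]
print(net_citations(labels))  # 2 positive - 3 negative = -1
```

Under this scheme a heavily cited paper can still end up with a negative score if most of its citations are critical.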

Allowing citations to be both positive and negative would completely change how we measure scientific impact. But would it be for the better? I have mixed feelings.

Problems with negative citations

You’ve likely thought of some problems with negative citations. An obvious problem is that we need algorithms that can distinguish between a positive and negative citation. This would require natural language processing and the ability to parse context. Understanding the positive/negative context of a citation is certainly a difficult task. But given the success of natural language parsers like Watson, this type of algorithm isn’t a pipe dream.

There’s also the problem of how we decide what counts as a positive or negative citation. I recognize that there’s a subjective element to this decision. And many citations may be neither positive nor negative. They’re what I call ‘citation dumps’: an obligatory dump of information, referenced without comment. Maybe citation dumps should count neither positively nor negatively toward citation counts?

The more I think about actually implementing a positive/negative citation scheme, the more difficult it seems. But still, it would be interesting to at least try to distinguish between positive and negative citations.

The biggest problem with negative citations, however, is not creating the algorithm. Instead, it’s a much deeper problem that plagues all of science. We call it the tyranny of the majority.

Suppose a majority of researchers all believe in a certain theory (that is wrong). The person who advances an alternative (correct) theory will initially face a torrent of negative citations.

I don’t know a way to fix this problem. It’s just a fact that all new ideas start with few proponents, and must battle the inertia of the establishment. Granted, most new ideas are wrong. But some are right.

When science works as it should, correct ideas win the day. But the battle over new ideas can take a long time. History is littered with correct ideas whose progenitors died before the idea gained acceptance. The classic example is Alfred Wegener, who first proposed the idea of continental drift. Wegener’s ideas were ridiculed during his lifetime. But 30 years after Wegener’s death, the consensus changed. Continental drift is now an established fact.

Although I like the idea of negative citations, I worry that it would exacerbate the tyranny of the majority. Would the spectre of a negative citation make young researchers less likely to challenge authority? Would negative citations foster groupthink? I honestly don’t know.

While I worry about these problems, I think negative citations would allow fascinating analysis of scientific progress. Take Thomas Kuhn’s idea of a paradigm shift. This happens when an accepted set of ideas (like Newtonian physics) is replaced by a new set of ideas (like quantum physics). I think it would be fascinating to measure paradigm shifts using negative citations.

Measuring paradigm shifts with positive and negative citations

Let’s imagine how using negative citations might quantify a paradigm shift.

We start with an accepted paradigm that has many citations, most of them positive. Then something happens to trigger a paradigm shift. Scientists grow more critical of the old paradigm, and more negative citations crop up. Eventually, almost all references to the old paradigm are negative.

Measured using positive and negative citations, the death of an old scientific paradigm might look like this:

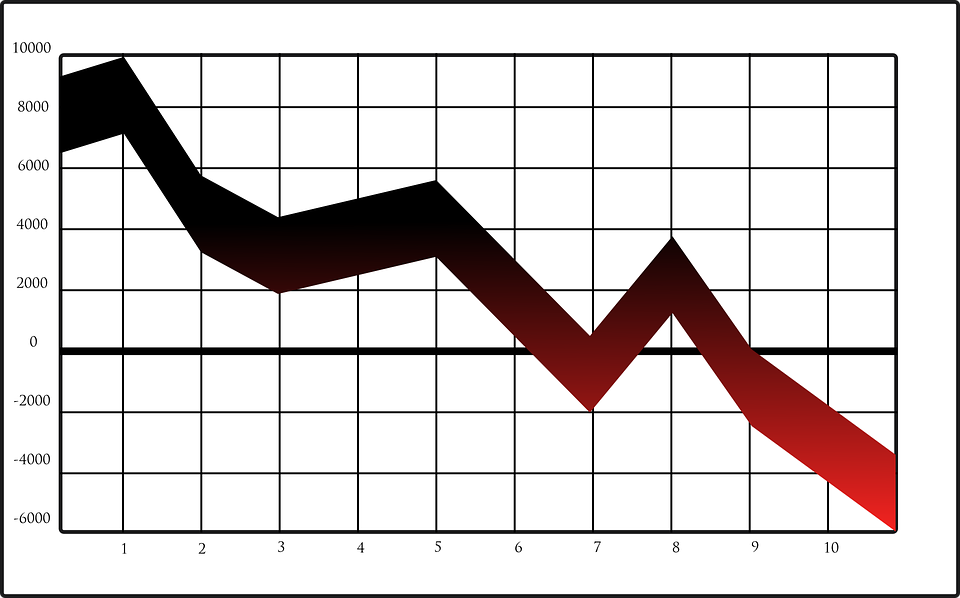

At the same time that the old paradigm dies, a new one is born. This new theory starts off with no citations. Then, as the old guard learns of it, the new paradigm gets a torrent of criticism. So its citation count goes negative. But over time, the new paradigm gains acceptance and the citation count turns positive.

Measured using positive and negative citations, the birth of a new paradigm might look like this:

It would be fascinating to apply this accounting to real theories. It makes me wonder what we’d find.
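As a rough sketch of what that accounting could involve, the snippet below tracks a running net citation count year by year. The yearly numbers are invented purely to mimic the two stories told above (an old paradigm sliding into the red, a new one starting negative and recovering). They are not data, and `cumulative_net` is a hypothetical helper.

```python
from itertools import accumulate

def cumulative_net(yearly):
    """Running total of (positive - negative) citations.

    yearly: list of (positive, negative) citation counts, one pair per year.
    """
    return list(accumulate(pos - neg for pos, neg in yearly))

# Invented numbers for illustration only.
old_paradigm = [(10, 0), (8, 2), (4, 6), (1, 9), (0, 10)]
new_paradigm = [(0, 0), (0, 5), (2, 6), (6, 3), (10, 1)]

print(cumulative_net(old_paradigm))  # [10, 16, 14, 6, -4]
print(cumulative_net(new_paradigm))  # [0, -5, -9, -6, 3]
```

With real citation data, the hard part would be the labeling step, not this bookkeeping.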

Negative citations are fine for theories … but what about people?

I have mixed feelings about applying negative citations to people. On the one hand, I would be ecstatic if the citation count of human capital theorists (I won’t name names) went deep into the red. But I wouldn’t want to be on the receiving end of a torrent of negative citations.

But while negative citations may be uncomfortable for scientists, my gut feeling is that they would be good for science.

I’d like to hear your thoughts. Are negative citations a good idea? Or am I off my rocker?