Tribalism in Science (and Economics)

February 10, 2020

Originally published on Economics from the Top Down

Blair Fix

If you ask the average person what ‘science’ is, they’ll probably answer something like ‘it’s what we know about the world’. To the lay person, ‘science’ is a body of facts.

To the trained scientist, however, ‘science’ means something different. It’s not a body of knowledge. It’s a method for determining what’s true and what’s not. To determine the way the world works, science appeals to evidence.

The ideal of science is beautifully summarized by the motto of the Royal Society: nullius in verba. It means ‘take nobody’s word for it’. In science, there is no authority. There are no gods, no kings, and no masters. Only evidence.

In this post, I reflect on how ‘taking nobody’s word for it’ cuts against some of our deepest instincts as humans. As social animals, we have evolved to trust members of our group. Among these group members, our instinct is to ‘take their word for it’. I call this the ‘tribal instinct’.

When we do science, we have to fight against this tribal instinct. Not surprisingly, we often fail. Rational skepticism gets overpowered by the instinct to trust members of our group. If the group happens to be powerful — say it dominates academia in a particular discipline — then false ideas get entrenched as ‘facts’.

This is a problem in all areas of science. But it’s a rampant problem in economics. The teaching of economics is dominated by the neoclassical sect, which has managed to entrench itself in academia. Among this sect, I believe, tribal instincts trump the rational appeal to evidence.

Recent empirical work highlights this fact. Neoclassical economists, it seems, pay deference to fellow members of their sect. But before getting to the fascinating empirical results, we’ll take a brief foray into evolutionary biology. This will help us understand why skepticism and the rational appeal to evidence so often get trumped by the tribal instinct to believe members of our group.

Our evolved sociality

While economists like to pretend otherwise, humans are social animals. We spend the vast majority of our lives in groups, and much of our time is spent negotiating social relations. Like all other social animals, we’ve evolved to behave this way. Sociality is an instinctual behavior.

In my opinion, the best explanation for the evolution of sociality comes from multilevel selection theory. Like orthodox Darwinism, multilevel selection theory appeals to ‘survival of the fittest’. The difference, however, is that multilevel selection looks at many ‘levels’ of selection. Orthodox Darwinism, in contrast, is concerned only with selection between individuals. One individual out-reproduces another, and so passes more of its genes to future generations.

Multilevel selection keeps this selection between individuals, but adds other units of selection — both smaller and larger. At the smaller level, there is selection between cells within organisms. This explains how multi-cellular organisms evolved in the first place. At the larger level, there is selection between groups of organisms. This explains how sociality evolves.

To explain the evolution of sociality, multilevel selection proposes that there must be competition between groups. The fiercer this competition, the stronger the selection for sociality. Like Darwin’s original theory of natural selection, the premise of group selection is simple. Here’s how David Sloan Wilson and E.O. Wilson put it:

Selfishness beats altruism within groups. Altruistic groups beat selfish groups. Everything else is commentary. (Source)

If there is strong competition between groups (especially if larger groups beat smaller groups), we expect sociality to evolve. The catch is that strong selection at the group level seems to be rare. We infer this because a minuscule fraction of all species are eusocial (or ‘ultra-social’).

Yet when group selection does happen, it produces potent results. Ultra-social species may be rare (in terms of the number of species), but they are spectacularly successful. In The Social Conquest of Earth, evolutionary biologist E.O. Wilson observes that the few ultra-social species that do exist — bees, ants, termites … and humans — dominate the planet (in terms of biomass).

Natural selection for tribalism: ‘taking somebody’s word for it’

With group selection in mind, let’s think about how the human instinct for tribalism — ‘taking somebody’s word for it’ — might evolve.

Imagine that you’re a member of a small tribe of hunter-gatherers. A member of your tribe returns from a scouting mission and warns that a rival tribe is about to attack.

What do you do?

Do you take the scout’s word for it? Or are you skeptical until you see the evidence first hand?

Let’s imagine how these two options might play out.

If you (and every other member of the tribe) take the scout’s word for it, then you immediately prepare for battle. If the scout was lying, the worst thing that could happen is that your tribe needlessly prepares for battle. This wastes your time, but little else. But if the scout was telling the truth, your tribe potentially avoids a devastating defeat (worst-case scenario … everyone dies).

Now imagine your tribe is filled with skeptical scientists. You (and every other member of the tribe) take nobody’s word for it. To get first-hand evidence that a rival tribe is actually approaching, everyone goes out to look, leaving the camp undefended. If the scout was lying, you avoid needlessly preparing for battle. But if the scout was telling the truth, your tribe potentially gets massacred by its rival. Voilà, your tribe of skeptics is eliminated from the gene pool.

If we repeat this scenario a few hundred thousand times, we can see how selection for trust of group members would occur. The groups that follow the ideals of science — ‘taking nobody’s word for it’ — slowly get wiped out. The groups that reject the ideals of science and ‘take a tribe-member’s word for it’ win out.
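To make the "repeat it many times" step concrete, here is a minimal toy simulation in Python. Everything in it is a hypothetical assumption for illustration: the function name `simulate_tribes`, the number of tribes, the chance that the scout is telling the truth (`p_scout_truthful`), and the chance that an undefended camp is overrun (`p_massacre`). Following the parable, a needless preparation for battle is treated as costless, and tribes eliminated in a round are replaced by offshoots of randomly chosen survivors.

```python
import random


def simulate_tribes(n_tribes=100, frac_trusting=0.5, rounds=200,
                    p_scout_truthful=0.5, p_massacre=0.5, seed=1):
    """Toy group-selection model of the scout parable.

    All parameters are hypothetical. Each tribe is either "trusting"
    (takes the scout's word and prepares) or "skeptical" (everyone leaves
    to check, so the camp is undefended). Returns the fraction of trusting
    tribes after each round.
    """
    rng = random.Random(seed)
    n_trusting = round(n_tribes * frac_trusting)
    tribes = ["trusting"] * n_trusting + ["skeptical"] * (n_tribes - n_trusting)
    history = [tribes.count("trusting") / n_tribes]

    for _ in range(rounds):
        survivors = []
        for tribe in tribes:
            attack_is_real = rng.random() < p_scout_truthful
            if tribe == "skeptical" and attack_is_real and rng.random() < p_massacre:
                continue  # undefended camp is overrun: the tribe is eliminated
            # Trusting tribes always prepare; a needless preparation is treated
            # as costless ("wastes your time, but little else").
            survivors.append(tribe)
        if not survivors:
            break  # every tribe was wiped out
        # Territory left by eliminated tribes is refilled by offshoots of
        # randomly chosen surviving tribes, which inherit their parent's type.
        offshoots = [rng.choice(survivors) for _ in range(n_tribes - len(survivors))]
        tribes = survivors + offshoots
        history.append(tribes.count("trusting") / n_tribes)

    return history


history = simulate_tribes()
print(f"trusting tribes: {history[0]:.0%} at start, "
      f"{history[-1]:.0%} after {len(history) - 1} rounds")
```

Because only skeptical tribes can be eliminated in this setup, the share of trusting tribes can never fall; changing the parameters only changes how fast the takeover happens. Giving needless preparation a real cost would be the obvious way to let skepticism compete.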

The point I want to make with this parable is that in many situations, trust of group members is a more adaptive trait than rational skepticism. This is certainly the case with warfare — violent competition between groups. And as Peter Turchin convincingly argues, warfare may have been the driving force behind human sociality. Warfare rewards groups that are able to function collectively, and punishes those that are not.

Tribalism in science

In light of the evolution of sociality, it’s not surprising that humans have an instinct to ‘take the word’ of fellow group members. What is surprising is our capacity for rational skepticism. Clearly we do have the ability to ‘take nobody’s word for it’. Science depends on this ability. But it’s by no means our dominant instinct.

Doing science, I argue, is a precarious act. The scientist must foster rational skepticism and suppress the instinct to conform to the ideas of the group. At the same time, testing scientific theories often requires large-scale cooperation. Experiments in particle physics, for instance, involve the cooperation of thousands of people. These scientists must maintain skepticism while simultaneously having faith in the actions of fellow group members.

This balancing act can easily veer in the wrong direction. Thus we should not be surprised when tribalism prevails, and false ideas get ensconced as ‘facts’. And we should celebrate (because of its improbability) when the rational appeal to evidence wins the day.

Measuring tribalism in science

Here’s a fun idea: what if we scientifically studied tribalism in science? It would be quite simple to do. To study tribalism, we’d measure the degree to which scientists hold the following ideals:

Ideal of Tribalism: Take group members’ word for it.

Ideal of Science: Take nobody’s word for it.

Of course, we can’t directly ask scientists which ideal they hold. They’ll almost certainly respond that they respect the ideals of science. The problem of tribalism, I suspect, is an unconscious one. Scientists know (or at least profess to know) that they should respect evidence. But tribal instincts get in the way. For instance, a scientist might selectively interpret evidence (or even ignore it entirely) based on the ideas of his/her group. In most cases, the scientist will be unaware of what’s going on.

To test for tribalism, we need to measure unconscious bias. Here’s one way we could do it. We test if scientists’ agreement with a given statement is affected by its attribution.

If tribalism dominates, we expect scientists to agree with a statement if it is attributed to a member of their tribe. Conversely, they should disagree with the same statement if it is attributed to a non-member of their tribe.

Here’s a fun example applied to the most tribal of human activities — organized religion. Suppose we asked American Christians if they agree with the following statements.

“God is great.” — Pope Francis

“God is great.” — Osama Bin Laden

My guess is that agreement would drop precipitously when the statement is attributed to Osama Bin Laden. His ‘God’, after all, is not the Christian God.

Conversely, suppose we asked physicists if they agree with the following statements:

“The Earth is round.” — Stephen Hawking

“The Earth is round.” — Donald Trump

Because there’s overwhelming evidence that the Earth is round, we expect 100% agreement with both statements. In other words, the evidence trumps physicists’ loathing of Trump (who is clearly not a member of their physics tribe).

These are toy examples, meant to illustrate the extremes of tribalistic and scientific ideals. But they illustrate a point. If the ideals of science dominate a discipline, attribution shouldn’t affect scientists’ agreement with a statement. Conversely, if the ideals of tribalism dominate a discipline, attribution should strongly affect agreement with a statement. Scientists should be more likely to agree with a statement if it’s attributed to a member of their tribe.
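The attribution test boils down to comparing agreement rates between two randomly assigned groups of respondents. Here is a minimal Python sketch, assuming simple agree/disagree answers coded 1 and 0. The function names and all response data are hypothetical, invented to mirror the toy examples above, and the difference-in-means plus permutation test is a generic approach chosen for illustration, not the estimation method Javdani and Chang used. The effect is defined as agreement under the switched attribution minus agreement under the original, so a negative number means agreement fell when the statement was credited to an outsider.

```python
import random


def agreement_rate(responses):
    """Share of respondents who agree (responses coded 1 = agree, 0 = disagree)."""
    return sum(responses) / len(responses)


def attribution_effect(original, switched):
    """Agreement under the switched attribution minus agreement under the original.

    Zero is what the ideal of science predicts; a negative value means
    agreement fell when the statement was credited to an outsider.
    """
    return agreement_rate(switched) - agreement_rate(original)


def permutation_p_value(original, switched, n_perm=10_000, seed=0):
    """Two-sided permutation test: how often does randomly relabelling the
    respondents give an effect at least as large as the observed one?"""
    rng = random.Random(seed)
    observed = abs(attribution_effect(original, switched))
    pooled = list(original) + list(switched)
    n = len(original)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        if abs(attribution_effect(pooled[:n], pooled[n:])) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


# Hypothetical responses, invented purely to illustrate the two extremes.
hawking, trump = [1] * 50, [1] * 50                        # evidence wins
pope, bin_laden = [1] * 45 + [0] * 5, [1] * 10 + [0] * 40  # tribe wins

print(round(attribution_effect(hawking, trump), 2))   # 0.0
print(round(attribution_effect(pope, bin_laden), 2))  # -0.7
print(permutation_p_value(pope, bin_laden))
```

Random assignment is what makes this comparison meaningful: since each respondent sees only one attribution, chosen at random, any systematic gap in agreement can be credited to the name attached to the statement rather than to who happened to answer.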

Measuring tribalism in economics

This brings us to the results that you’ve been waiting for — the evidence of tribalism in economics.

Mohsen Javdani and Ha-Joon Chang recently conducted a survey of economists that mirrors the logic described above. Javdani and Chang asked economists if they agreed with a given statement. With one exception, the statements came from members of the mainstream tribe in economics (the neoclassical sect). But unbeknownst to the economists, Javdani and Chang randomly switched the attribution of the statement from its original (neoclassical) source to a heterodox (non-mainstream) source. Javdani and Chang then tested how this change in attribution affected economists’ agreement with the statement.

Before getting to the results, let me frame my expectations. After studying economics for a decade, I’ve come to believe that the field is incredibly tribal. It’s dominated by a sect of neoclassical economists. Among these economists, deference to authority (prestigious members of the tribe) is everything. Evidence is an afterthought. In-house critic Paul Romer puts it this way:

Progress in the field [of economics] is judged by the purity of its mathematical theories, as determined by the authorities.

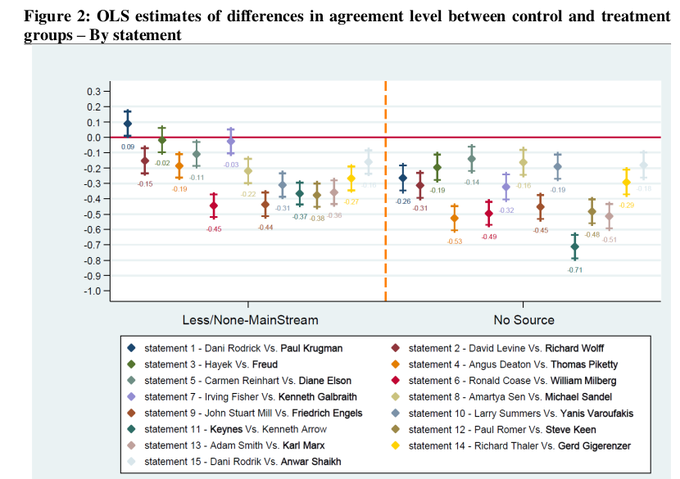

Given my experience in economics, I wasn’t surprised when I read Javdani and Chang’s results. They found that agreement with a given statement strongly depended on its attribution. Economists were more likely to agree with a statement if it was attributed to a mainstream source rather than a heterodox source. Here are their results:

Evidence for tribalism in economics. This chart shows the difference in economists’ agreement with a statement when attribution was changed from a mainstream to a non-mainstream source. The table below shows the economists to which each statement was attributed. The first name is the actual source. The bold name is the non-mainstream economist. Source: “Who Said or What Said?” by Mohsen Javdani and Ha-Joon Chang.

To interpret this chart, look at the sign of the plotted point. If it’s positive, agreement increased when the statement was attributed to a non-mainstream source. If it’s negative, agreement decreased. All but one of the points are negative, meaning economists agree more with a statement when it is attributed to a mainstream economist.

These results nicely demonstrate how tribalism dominates economics. Most economists are members of the neoclassical tribe. And rather than ‘take nobody’s word for it’, economists preferentially take fellow tribe members’ word for it.

Rationalizing tribalism

Perhaps even more interesting than Javdani and Chang’s results is neoclassical economists’ reaction to them. I recently posted the above figure on Twitter, causing a bit of a firestorm. Here are some of the reactions:

Many of the heterodox economists listed are … known to not know much of value so brand name capital is either minimal or negative. Real finding of paper is there aren’t ten heterodox economists that are even well known. (Source)

The Econ Nobel is a good indicator of being within the scientific consensus, if anything it’s less of an appeal to authority than trusting a random heterodox academic on economic because “they’re an economist”. (Source)

I’m … not sure that reliance on authority is necessarily fallacious if authority only granted on basis of expertise and judgment earned through years of careful study. (Source)

Rather than refute the study’s findings, these reactions demonstrate that mainstream economists know little about the ideals of science. Among members of the tribe, deference to authority is the ‘rational’ course of action. And we all know how neoclassical economists worship rationality.

A broader problem?

Lest we be too hard on economics, I think Javdani and Chang’s findings would probably replicate in other social sciences, and to a lesser extent in the natural sciences. But I suspect that the results would be less spectacular. My experience is that economics is far and away the most tribal of disciplines.

I’d like to see this type of research extended to all disciplines, and studied over time. Given our evolutionary heritage, we should expect to find tribal instincts at work in science, even when scientists profess to respect only the evidence.

The ideal of science — to take nobody’s word for it — contradicts our social instincts. It’s far easier to believe people we trust — members of our tribe. That science works at all is something we should marvel at. And when we fall short of the ideals of science, we shouldn’t obfuscate. We should admit that we’ve given in to base urges, and that we need to do better.